What is Location Data? A Complete Guide for Public and Private Sector Teams

Location data, also known as geospatial data or mobility data, captures the movement of devices and objects through physical space, transforming real-world activity into actionable intelligence.

Introduction

Welcome to this comprehensive overview of location data. Whether you're a product leader building location-powered applications or a data analyst seeking to understand mobility patterns, this resource will help you understand what location data is, where it comes from, and how it creates value across sectors.

This guide covers the fundamentals of location data collection and processing, explores key use cases across public and private sectors, and explains what separates raw location signals from intelligence-ready data that can power mission-critical decisions.

What is Location Data?

Location data is the digital record of movement produced by GPS-enabled devices as they travel through the physical world. While basic location information can be derived from IP addresses, the most valuable and precise location data comes from GPS signals collected from mobile devices with explicit user consent.

These signals capture where devices have been, when they were there, and how they moved between locations, creating rich datasets for understanding real-world behavior, population movement, and activity patterns. Because these signals reflect human mobility patterns, location data is a powerful input for applications across industries and government functions.

For organizations working with location data, the challenge is transforming billions of raw data points into reliable intelligence that supports accurate analysis and informed decision-making.

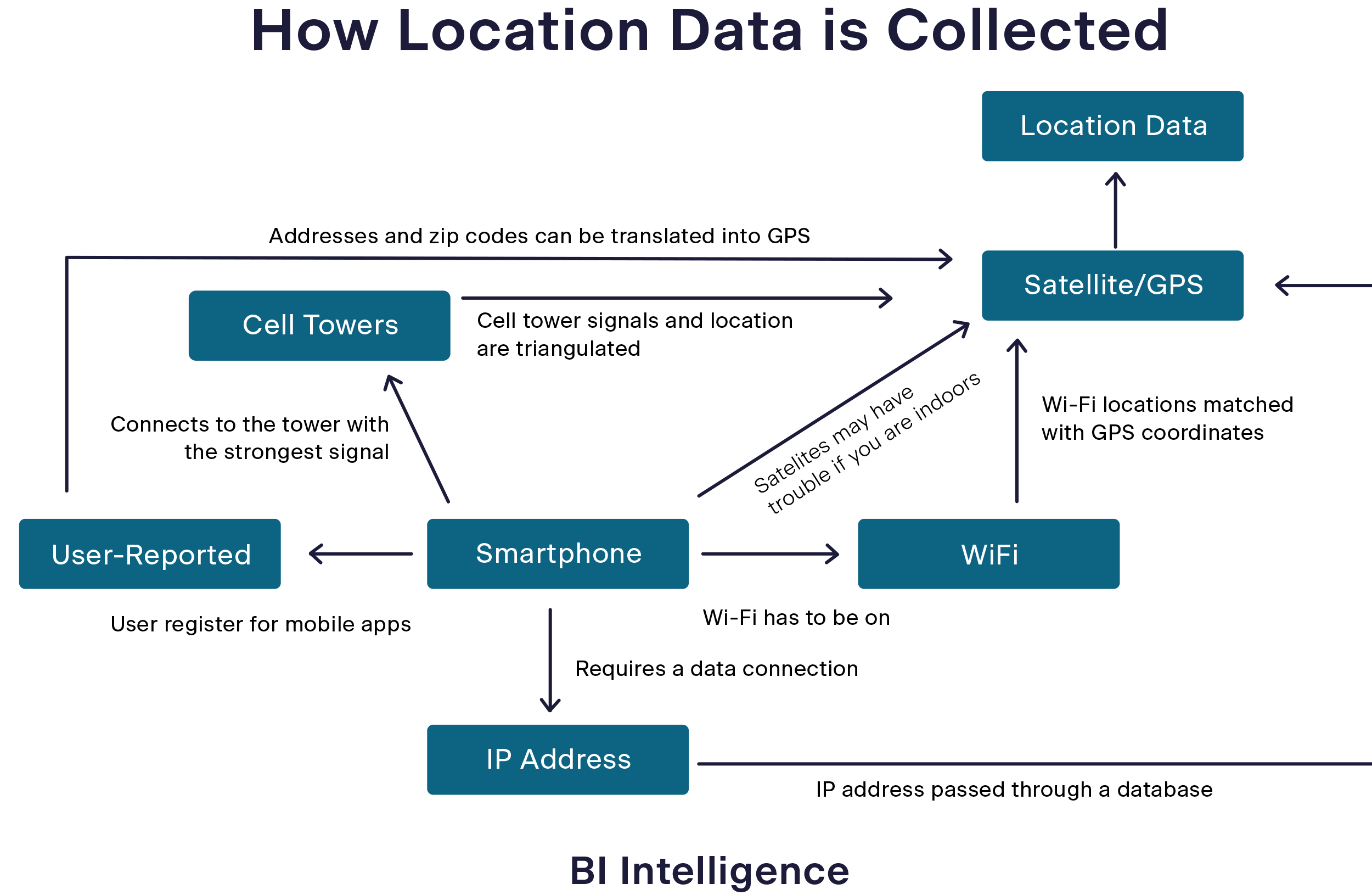

Where Does Location Data Come From?

Location data originates from GPS-enabled devices, including mobile phones, tablets, connected vehicles, IoT sensors, and wearable technology. The most reliable and privacy-compliant sources come from mobile applications that collect location information with explicit user consent, such as navigation apps, weather services, and delivery platforms.

The Collection Process

The technical collection process involves software development kits (SDKs) embedded within mobile applications. These SDKs capture multiple signal types:

- GPS coordinates providing latitude and longitude

- Cellular tower information offering location data when GPS is unavailable

- Wi-Fi network data enabling location determination in urban environments

This commercially available data does not carry direct personal identifiers. Location data providers do not collect personal information such as names, phone numbers, or email addresses. Instead, they work with pseudonymous identifiers, such as mobile advertising IDs, that are not directly linked to individual identities.

Once collected, these signals are transmitted to data aggregators or providers who merge them with other data records and make them available for purchase. However, not all providers process this data equally. Many simply resell raw signals, while a select few invest in extensive validation, quality control, and enrichment to transform raw data into reliable intelligence. Venntel is one of very few companies that take this processing even further, with forensic flagging, signal enrichment, and analytic capabilities that provide an in-depth understanding of each location signal for mission-critical analysis.

Consent and Privacy

Responsible location data collection operates on an opt-in consent model. Users explicitly agree to share their location information through app permissions, and reputable providers maintain rigorous privacy controls including the removal of signals from sensitive locations and compliance with evolving privacy regulations.

Raw Data vs. Intelligence-Ready Data

Not all location data delivers equal value. One of the most critical distinctions for teams evaluating location data sources is understanding the difference between raw signals and intelligence-ready data.

The Location Data Quality Challenge

Industry research suggests that up to 50 percent of location signals from some sources may be unreliable or flawed. Common quality issues include:

- Synthetic signals generated by bots or emulators rather than real devices

- Replay data consisting of old signals with altered timestamps

- Device spoofing where locations are deliberately falsified

For analysts and data teams, these quality issues can create significant challenges. Unreliable data introduces noise and can lead to fundamentally flawed analysis, wasted investigative resources, and incorrect operational decisions.

What Makes Data Intelligence-Ready?

Transforming raw location signals into intelligence-ready data requires sophisticated processing:

- Deduplication and signal merging to eliminate redundant data from multiple sources

- Schema validation to remove incomplete records missing essential data like latitude, longitude, or timestamps

- Forensic analysis and enrichment adding built-in analytics, behavioral flags, and context to each signal that helps analysts assess reliability and detect anomalous patterns

- Privacy screening to remove data from sensitive locations and ensure regulatory compliance

When enterprises need data from a high-population country or across the globe, data storage costs can become a significant line item. Basic deduplication and signal merging across commercially available datasets eliminate redundant data from multiple sources and can reduce storage costs by 20-30 percent.
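
To make the deduplication step concrete, here is a minimal Python sketch. It assumes each signal is a dictionary with hypothetical `device_id`, `timestamp` (epoch seconds), `lat`, `lon`, and `source` fields, and it treats two signals as duplicates when they share a device, a timestamp, and coordinates that match to five decimal places (roughly one meter). The field names and the rounding threshold are illustrative assumptions, not a description of any provider's actual method.

```python
from typing import Iterable


def dedupe_signals(signals: Iterable[dict], coord_decimals: int = 5) -> list[dict]:
    """Keep the first signal seen for each (device, time, rounded position) key."""
    seen = set()
    unique = []
    for s in signals:
        key = (
            s["device_id"],
            s["timestamp"],  # assumed to be epoch seconds
            round(s["lat"], coord_decimals),
            round(s["lon"], coord_decimals),
        )
        if key not in seen:
            seen.add(key)
            unique.append(s)
    return unique


# Hypothetical records: the first two describe the same observation
# delivered by two different (made-up) providers.
raw = [
    {"device_id": "a1", "timestamp": 1700000000, "lat": 38.897700, "lon": -77.036500, "source": "provider_x"},
    {"device_id": "a1", "timestamp": 1700000000, "lat": 38.897701, "lon": -77.036499, "source": "provider_y"},
    {"device_id": "a1", "timestamp": 1700000060, "lat": 38.898000, "lon": -77.036000, "source": "provider_x"},
]

unique = dedupe_signals(raw)
print(len(raw), "->", len(unique))  # prints: 3 -> 2
```

Rounding is a deliberately simple matching rule. Two nearly identical points that fall on opposite sides of a rounding boundary would not be merged, so a production pipeline would likely need a tolerance-based comparison instead.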

Schema validation removes incomplete records. Signals missing essential information like latitude, longitude, timestamps, or other critical metadata are filtered out, ensuring analysts work only with usable data.
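
A basic version of this check can be sketched in a few lines of Python. The required field names below are hypothetical, and the range checks (latitude between -90 and 90, longitude between -180 and 180, a positive timestamp) are the minimum one might reasonably apply; real schemas will usually carry more metadata.

```python
REQUIRED_FIELDS = ("device_id", "lat", "lon", "timestamp")  # hypothetical schema


def is_valid_signal(signal: dict) -> bool:
    """Return True only if required fields are present, numeric, and in range."""
    if any(signal.get(field) is None for field in REQUIRED_FIELDS):
        return False
    try:
        lat = float(signal["lat"])
        lon = float(signal["lon"])
        ts = float(signal["timestamp"])
    except (TypeError, ValueError):
        return False
    # NaN fails every comparison, so it is rejected here as well.
    return -90.0 <= lat <= 90.0 and -180.0 <= lon <= 180.0 and ts > 0


signals = [
    {"device_id": "a1", "lat": 38.8977, "lon": -77.0365, "timestamp": 1700000000},
    {"device_id": "b2", "lon": -77.0365, "timestamp": 1700000000},               # missing latitude
    {"device_id": "c3", "lat": 123.0, "lon": -77.0365, "timestamp": 1700000000},  # latitude out of range
    {"device_id": "d4", "lat": 38.8977, "lon": -77.0365, "timestamp": None},      # missing timestamp
]

valid = [s for s in signals if is_valid_signal(s)]
print(len(valid))  # prints: 1
```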

Forensic analysis and enrichment add built-in analytics to each signal, including behavioral flags that identify implausible movement patterns, replay data, or devices that appear to be traveling in a vehicle. This layer of intelligence helps analysts quickly assess which signals warrant investigation and which represent noise or synthetic activity.
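
One of the simplest behavioral flags, implausible movement, can be approximated by computing the speed implied by consecutive signals from the same device. The sketch below uses the standard haversine great-circle formula and assumes hypothetical `lat`, `lon`, and `timestamp` (epoch seconds) fields. The 340 m/s threshold, roughly the speed of sound and faster than a commercial airliner, is an arbitrary illustrative cutoff, not a value taken from any provider's methodology.

```python
import math

EARTH_RADIUS_M = 6_371_000.0


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in meters between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def flag_implausible_speed(track: list[dict], max_speed_mps: float = 340.0) -> list[dict]:
    """Return time-ordered copies of the signals with an 'implausible_speed' flag."""
    ordered = sorted(track, key=lambda s: s["timestamp"])
    flagged = []
    prev = None
    for s in ordered:
        implausible = False
        if prev is not None:
            dt = s["timestamp"] - prev["timestamp"]
            dist = haversine_m(prev["lat"], prev["lon"], s["lat"], s["lon"])
            # A position change in zero time, or an implied speed above the cap, is flagged.
            implausible = dist > 0 and (dt <= 0 or dist / dt > max_speed_mps)
        flagged.append({**s, "implausible_speed": implausible})
        prev = s
    return flagged


# Hypothetical track: Washington, DC, then New York City one minute later.
track = [
    {"device_id": "a1", "timestamp": 1700000000, "lat": 38.8977, "lon": -77.0365},
    {"device_id": "a1", "timestamp": 1700000060, "lat": 38.8980, "lon": -77.0360},
    {"device_id": "a1", "timestamp": 1700000120, "lat": 40.7128, "lon": -74.0060},
]

for s in flag_implausible_speed(track):
    print(s["timestamp"], s["implausible_speed"])
```

A flag like this marks a signal for review rather than proving it is synthetic. A single bad GPS fix can produce the same pattern as a spoofed device, which is why the flag is attached to the signal instead of being used to drop it.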

Privacy screening ensures compliance by removing data from sensitive locations. Responsible providers maintain comprehensive databases of protected areas, including healthcare facilities, places of worship, and other sensitive sites, automatically filtering signals from these locations.
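
As a rough illustration of sensitive-location filtering, the following Python sketch drops any signal that falls within an exclusion radius of a listed site. The site list, coordinates, and radii are invented for the example, and the distance function is an equirectangular approximation that is adequate only over short distances. A production system would more likely rely on building footprints or polygons and a spatial index.

```python
import math


def approx_distance_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Equirectangular approximation; reasonable for distances of a few kilometers."""
    mean_lat = math.radians((lat1 + lat2) / 2)
    dx = math.radians(lon2 - lon1) * math.cos(mean_lat)
    dy = math.radians(lat2 - lat1)
    return 6_371_000.0 * math.hypot(dx, dy)


# Hypothetical sensitive sites: (name, lat, lon, exclusion radius in meters).
SENSITIVE_SITES = [
    ("example_clinic", 40.7410, -73.9900, 150.0),
    ("example_place_of_worship", 40.7500, -73.9800, 100.0),
]


def screen_sensitive(signals: list[dict], sites=SENSITIVE_SITES) -> list[dict]:
    """Return only the signals that are outside every site's exclusion radius."""
    kept = []
    for s in signals:
        near_site = any(
            approx_distance_m(s["lat"], s["lon"], lat, lon) <= radius
            for _name, lat, lon, radius in sites
        )
        if not near_site:
            kept.append(s)
    return kept


signals = [
    {"device_id": "a1", "lat": 40.7411, "lon": -73.9901},  # a few meters from example_clinic
    {"device_id": "b2", "lat": 40.7600, "lon": -73.9700},  # more than a kilometer from both sites
]

kept = screen_sensitive(signals)
print([s["device_id"] for s in kept])  # prints: ['b2']
```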

This processing transforms massive volumes of raw signals into curated intelligence that analysts can trust for mission-critical applications, from understanding population movements during emergencies to identifying patterns relevant to security operations.

Understanding Signal Precision in Location Data

Not all location data signals offer the same level of precision. GPS-derived signals provide the highest precision, using satellite signals to pinpoint location within meters. Cell tower triangulation offers moderate precision, often identifying general area or neighborhood but lacking building-level accuracy. IP-based geolocation typically provides only city-level precision. The collection method fundamentally determines how precise a location signal can be.

Environmental factors further affect the accuracy of GPS signals. Urban environments with tall buildings create "canyon effects" where GPS signals bounce off structures, causing multipath errors that degrade precision (you might notice this when a navigation app places you on the wrong street among downtown high-rises). Indoor locations, dense foliage, and atmospheric conditions like storms can weaken or block satellite signals entirely, reducing accuracy or making GPS unavailable. Commercial GPS typically achieves accuracy of 5-10 meters under ideal outdoor conditions, but this can degrade significantly in challenging environments.

Modern mobile operating systems also allow users to control location precision through app permissions. Since iOS 14 and Android 12, users can grant apps either "precise" or "approximate" location access. Precise location uses GPS and provides accuracy within meters, while approximate location delivers only general area information with accuracy ranging from hundreds of meters to several kilometers. Many apps function perfectly well with approximate location. Weather apps need only city-level location, and social features often work with neighborhood-level precision. Others, such as navigation apps, require precise location to function properly.

This permission framework means that commercially available location data includes signals with varying precision levels based on both technical collection methods and user privacy choices, making it essential for analysts to understand the precision characteristics of their data sources.
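
One practical way to account for mixed precision is to bucket signals by their reported accuracy before analysis. The sketch below assumes each record carries a hypothetical `horizontal_accuracy_m` field (mobile platforms report an estimated accuracy radius with each fix, though whether a given dataset passes it through is an assumption). The tier boundaries loosely follow the figures above, 10 meters for good GPS and hundreds of meters for approximate location, and are illustrative only.

```python
from collections import Counter
from typing import Optional


def precision_tier(horizontal_accuracy_m: Optional[float]) -> str:
    """Bucket a reported accuracy radius (meters) into a coarse precision tier."""
    if horizontal_accuracy_m is None or horizontal_accuracy_m < 0:
        return "unknown"
    if horizontal_accuracy_m <= 10:
        return "precise"       # consistent with GPS under good outdoor conditions
    if horizontal_accuracy_m <= 100:
        return "degraded"      # e.g. multipath, indoor, or non-GPS positioning
    return "approximate"       # consistent with approximate-location permissions


# Hypothetical records; the last one has no accuracy value at all.
signals = [
    {"device_id": "a1", "horizontal_accuracy_m": 6.0},
    {"device_id": "b2", "horizontal_accuracy_m": 45.0},
    {"device_id": "c3", "horizontal_accuracy_m": 1800.0},
    {"device_id": "d4"},
]

tiers = Counter(precision_tier(s.get("horizontal_accuracy_m")) for s in signals)
print(dict(tiers))
```

An analysis that needs building-level accuracy could then restrict itself to the "precise" tier, while a regional population study could reasonably include all four.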

Location Data Use Cases

Location intelligence powers applications across sectors, from government operations to commercial analytics. The following examples illustrate how organizations leverage location data to solve real-world challenges.

Public Sector Applications

Open Source Intelligence (OSINT)

Location data serves as an essential OSINT data source for national security and law enforcement operations. Intelligence analysts integrate location intelligence with social media, public records, and other open-source data to validate findings, detect behavioral patterns, and provide real-world context for digital footprints. This mobility intelligence supports investigations into transnational threats including drug trafficking, human trafficking, and organized crime networks. By analyzing movement patterns and spatial relationships, OSINT platforms can identify covert associations, detect surveillance activities, and uncover logistical networks that might otherwise remain hidden in disparate data sources.

Emergency Response and Disaster Management

During natural disasters or public emergencies, location data provides essential visibility into population movements, evacuation patterns, and areas requiring assistance. Emergency managers can identify stranded populations, optimize resource distribution routes, and measure the effectiveness of evacuation orders based on actual observed movement.

Urban Planning and Transportation

Government planners leverage location data to understand commuting patterns, evaluate public transit effectiveness, and inform infrastructure investment decisions. This data-driven approach helps cities optimize traffic flow, identify underserved areas for transit expansion, and measure the impact of urban development initiatives. Similarly, organizations use location data to understand how people interact with wild spaces, like state parks or nature reserves.

Commercial Applications

Digital Advertising

Adtech companies leverage location data to build audience segments and deliver targeted advertising campaigns. By analyzing location patterns and visit behavior, advertisers can identify devices that have visited specific locations or exhibit particular mobility patterns. Location data providers supply device IDs and other pseudonymous identifiers that enable advertisers to reach relevant audiences across digital channels. These identifiers can be linked with other data sources to create comprehensive audience profiles for targeting and measurement without exposing names or other direct identifiers.

Site Selection and Market Analysis

Retail chains and real estate investors use location data to evaluate potential locations by analyzing foot traffic patterns, demographic flows, and competitive dynamics. This objective data complements traditional demographic research with real-world behavior patterns, reducing risk in expansion decisions.
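
A simple foot traffic measure is the number of distinct devices observed near a candidate site each day. The Python sketch below counts unique device IDs per UTC day inside a rectangular bounding box. The field names, the sample coordinates, and the use of a bounding box rather than a true site boundary are all simplifying assumptions.

```python
from collections import defaultdict
from datetime import datetime, timezone


def daily_unique_visitors(signals, south, west, north, east):
    """Count distinct device IDs per UTC day inside a lat/lon bounding box."""
    visitors = defaultdict(set)
    for s in signals:
        if south <= s["lat"] <= north and west <= s["lon"] <= east:
            day = datetime.fromtimestamp(s["timestamp"], tz=timezone.utc).date().isoformat()
            visitors[day].add(s["device_id"])
    return {day: len(ids) for day, ids in sorted(visitors.items())}


# Hypothetical signals around a made-up candidate site.
signals = [
    {"device_id": "a1", "timestamp": 1700000000, "lat": 40.7412, "lon": -73.9897},
    {"device_id": "a1", "timestamp": 1700000060, "lat": 40.7413, "lon": -73.9896},  # same device, same day
    {"device_id": "b2", "timestamp": 1700000300, "lat": 40.7411, "lon": -73.9899},
    {"device_id": "c3", "timestamp": 1700000300, "lat": 40.7600, "lon": -73.9700},  # outside the box
    {"device_id": "a1", "timestamp": 1700086400, "lat": 40.7412, "lon": -73.9897},  # next day
]

counts = daily_unique_visitors(signals, south=40.7405, west=-73.9905, north=40.7420, east=-73.9890)
print(counts)  # prints: {'2023-11-14': 2, '2023-11-15': 1}
```

Counts like these describe observed devices, not total visitors, so comparisons between sites are generally more meaningful than the absolute numbers.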

Supply Chain Optimization

Location intelligence helps logistics companies understand demand patterns, optimize inventory positioning, and predict supply chain disruptions. By analyzing historical movement data, organizations can forecast seasonal fluctuations and adjust operations proactively.

Common Pitfalls in Working With Location Data

Organizations working with location data face technical and operational challenges that require specialized expertise to address effectively.

Quality and Reliability

As discussed earlier, ensuring data quality across multiple sources represents one of the most significant challenges. Without robust validation processes, unreliable signals can compromise analysis and lead to incorrect conclusions. Organizations must implement sophisticated pattern recognition, cross-source validation, and continuous quality monitoring.

Privacy and Compliance

Location data processing must comply with an evolving landscape of privacy regulations including GDPR, CCPA, and sector-specific requirements. This involves implementing privacy-by-design architectures, managing user consent and opt-out requests, and removing signals from sensitive locations such as healthcare facilities, places of worship, and residential areas.

The technical implementation requires systems that can handle privacy controls at scale, maintain audit trails for compliance reporting, and adapt to changing regulatory requirements without disrupting operations.

Scale and Processing Complexity

Processing billions of location signals requires specialized infrastructure designed for spatial-temporal data. Traditional data pipelines often struggle with the unique characteristics of location data, including high dimensionality, complex spatial relationships, and the need for real-time processing capabilities.

Integration Challenges

Most organizations work with multiple data sources to achieve necessary coverage and scale. This creates engineering and resource challenges around schema standardization, duplication across providers, and quality normalization between sources with different collection methodologies.
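
Schema standardization usually starts with mapping each provider's field names and timestamp formats onto one common record. The following Python sketch shows the idea for two made-up providers. The provider names, field names, and the heuristic for telling epoch milliseconds from epoch seconds are assumptions chosen for illustration.

```python
from datetime import datetime, timezone

# Hypothetical mappings from provider-specific field names to a common schema.
FIELD_MAPS = {
    "provider_x": {"id": "device_id", "latitude": "lat", "longitude": "lon", "ts": "timestamp"},
    "provider_y": {"ad_id": "device_id", "y": "lat", "x": "lon", "time_utc": "timestamp"},
}


def to_epoch_seconds(value) -> int:
    """Accept epoch seconds, epoch milliseconds, or an ISO 8601 string."""
    if isinstance(value, str):
        dt = datetime.fromisoformat(value.replace("Z", "+00:00"))
        if dt.tzinfo is None:
            dt = dt.replace(tzinfo=timezone.utc)  # assumption: naive times are UTC
        return int(dt.timestamp())
    value = int(value)
    # Heuristic: values this large are treated as milliseconds, not seconds.
    return value // 1000 if value > 10**11 else value


def normalize(record: dict, provider: str) -> dict:
    """Rename fields to the common schema and coerce types."""
    mapping = FIELD_MAPS[provider]
    out = {common: record[src] for src, common in mapping.items()}
    out["lat"] = float(out["lat"])
    out["lon"] = float(out["lon"])
    out["timestamp"] = to_epoch_seconds(out["timestamp"])
    out["source"] = provider
    return out


a = normalize({"id": "a1", "latitude": "38.8977", "longitude": "-77.0365", "ts": 1700000000000}, "provider_x")
b = normalize({"ad_id": "a1", "y": 38.8977, "x": -77.0365, "time_utc": "2023-11-14T22:13:20Z"}, "provider_y")
print(a["timestamp"] == b["timestamp"])  # prints: True
```

Once records share one schema, cross-provider deduplication and quality scoring can operate on a single set of field names instead of one code path per source.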

How Venntel Delivers Location Intelligence

For organizations requiring location intelligence, a critical decision emerges: build internal data infrastructure or partner with a specialized provider. Building enterprise-grade location data capabilities requires massive upfront investment in data acquisition, processing systems, quality validation, privacy compliance, and ongoing maintenance.

Most organizations find that developing location data processing capabilities in-house diverts resources from core mission activities while requiring specialized expertise that may not align with organizational strengths.

The Venntel Approach

Venntel provides intelligence-ready location data that eliminates the need for organizations to build and maintain complex processing infrastructure. Rather than simply selling raw location signals, Venntel invests in comprehensive data acquisition and processing systems that transform billions of daily signals into curated intelligence.

Drawing from 15+ diverse and deduplicated data sources, our processing methodology enhances raw location signals with quality validation and forensic analysis, creating actionable intelligence products while maintaining strict privacy safeguards. This approach provides security and intelligence professionals with reliable location data while respecting individual privacy rights and adhering to relevant regulatory frameworks.

Built for Mission-Critical Applications

Venntel's platform supports the complete analytical lifecycle, from initial data exploration through production deployment, enabling organizations to focus on mission outcomes rather than data engineering challenges. Our processed data products deliver enriched analytics on each signal, reducing technical complexity and enabling security professionals to focus on analysis rather than data validation. The Venntel team comprises tradecraft experts who offer a unique blend of mission and technical experience and are committed to ensuring your success.

Partner with Venntel for Location Intelligence

Venntel serves as the location intelligence partner for organizations requiring reliable, privacy-compliant mobility data for mission-critical applications. Our platform processes billions of daily signals from diverse sources, delivering validated location intelligence that supports operational planning, threat detection, and strategic analysis.

We specialize in solving the complex technical challenges that analysts and data teams face when working with location data, from multi-source integration and quality validation to privacy compliance and signal analysis. Organizations leverage our intelligence to support border security operations, critical infrastructure protection, emergency response coordination, investigative analysis, and more.

Want to explore how curated location intelligence can help your organization? Chat with an expert.

FAQs

How is location data collected with privacy protections?

Location data is collected through opt-in consent mechanisms in mobile applications. Users explicitly agree to share their location through app permissions. Responsible providers like Venntel implement comprehensive privacy controls including sensitive location filtering, regulatory compliance screening, and the removal of data from protected areas such as healthcare facilities and places of worship.

What makes location data reliable for analytical applications?

Reliable location data undergoes extensive processing to validate movement patterns, eliminate duplicates, and flag potentially problematic data. Quality providers implement forensic analysis techniques that identify replay data, device spoofing, and implausible movement patterns, helping analysts focus on genuine signals rather than noise.

How does location data support government operations?

Government agencies use location data for open source intelligence (OSINT) operations, emergency response coordination, urban planning, and investigative analysis. The data provides objective visibility into population movements, activity patterns, and behavioral trends that inform operational decisions and resource allocation. Intelligence analysts integrate location data with other open-source information to support investigations into transnational threats, while emergency managers and urban planners use mobility patterns to optimize resource deployment and infrastructure investments.

What's the difference between raw location signals and processed intelligence?

Raw location signals are unvalidated data points that may include significant percentages of unreliable or synthetic data. Processed location intelligence has undergone quality validation, deduplication, enrichment, and privacy screening, transforming raw signals into curated data that analysts can trust for mission-critical applications.